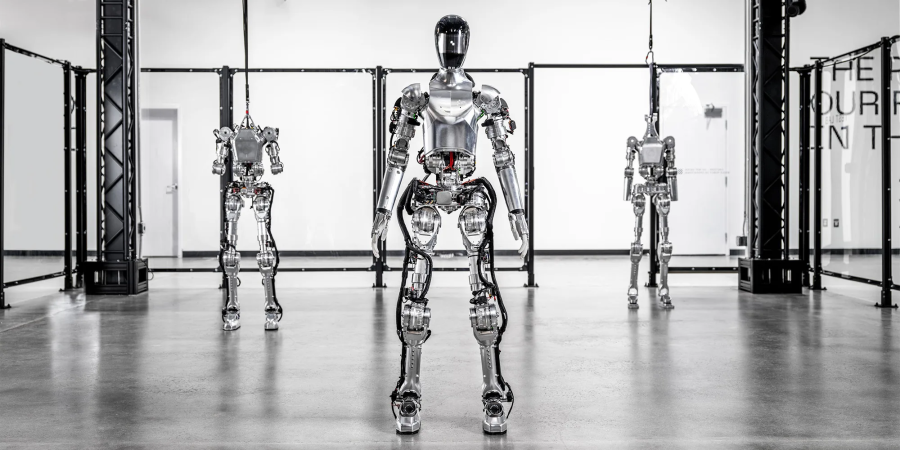

Robots That Reason: Figure 01 Learns to Talk and Think with OpenAI!

The world of robotics is on the cusp of a revolution. A recent collaboration between Figure AI and OpenAI has produced a striking demonstration: their humanoid robot, Figure 01, can now understand spoken language, reason about what it sees, and respond in speech in real time. This marks a major leap forward, narrowing the gap between machines that follow commands and machines that understand them.

Imagine a robot that holds a conversation, explains its actions clearly, and adapts to your requests. That is the future Figure 01 points toward. A recent YouTube video [link to video] shows the robot describing its surroundings, understanding spoken requests, and responding to them.

In the video, Figure 01 is shown a table with several objects on it and describes what each item is and where it sits, demonstrating that it can process visual information. What stands out most, though, is its reasoning. When asked for something to eat, it retrieves an apple and explains why it chose that item. It then goes on to clear the table, showing that it understands the context of the situation.

This breakthrough is a significant milestone on the path toward intelligent, interactive robots, and the potential applications are broad: robots that help out in homes and workplaces, take on complex tasks, and even become partners in scientific research and exploration.

Here's a deeper dive into the technological marvel behind Figure 01's abilities:

The Power of Multimodal Learning: OpenAI's contribution is a multimodal model that takes in several kinds of input at once, including images from the robot's cameras (what it sees) and text transcribed from speech (what it hears). By reasoning over both together, the model can interpret the situation as a whole rather than treating each input in isolation.

Reasoning Through Language: The model does more than recognize objects and words; it links them, for example connecting the request "something to eat" with the apple it sees on the table. This lets Figure 01 infer the intent behind an instruction and choose a response that fits the situation.

Speech Recognition and Response: Speech recognition converts spoken words into text the model can process, which is how Figure 01 interprets commands and questions. In the other direction, text-to-speech turns the model's text replies into audio, so the robot can explain its thoughts and responses out loud.

Action Planning and Execution: Figure 01 is more than a conversationalist. The model also decides what the robot should do physically: based on what it has understood, it selects an appropriate action from a library of learned behaviors, and that behavior then drives the robot's movements. This is how it can both understand a request and act on it, as in the video when it retrieves the apple and clears the table.
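To make the four stages above more concrete, here is a toy Python sketch of how such a hear, decide, act, speak loop could be wired together. It is not Figure's or OpenAI's code. Every name in it (`transcribe`, `decide`, `speak`, `step`, `BEHAVIORS`) is a hypothetical placeholder, the keyword rules in `decide` merely stand in for a real multimodal model, and each "learned behavior" just returns a description instead of moving any motors.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# All names below are hypothetical illustrations, not Figure or OpenAI APIs.

EDIBLE = {"apple", "banana"}   # objects the toy "model" treats as food
DISHES = {"plate", "cup"}      # objects it knows how to put away


@dataclass
class Decision:
    reply: str     # text the robot will say back
    behavior: str  # key into the behavior library
    target: str    # object(s) the behavior acts on


def transcribe(audio: str) -> str:
    """Stand-in for speech recognition: the 'audio' is already text here."""
    return audio.strip().lower()


def decide(scene: List[str], request: str) -> Decision:
    """Stand-in for the multimodal model.

    Combines what the robot sees (object labels in `scene`) with what it
    heard (`request`) using simple keyword rules instead of a real model.
    """
    words = set(re.findall(r"[a-z]+", request))
    if "eat" in words:
        food = [obj for obj in scene if obj in EDIBLE]
        if food:
            return Decision(
                f"Here is the {food[0]}, since it is something you can eat.",
                "hand_over",
                food[0],
            )
        return Decision("I don't see anything to eat.", "idle", "")
    if words & {"clean", "clear", "tidy"}:
        dishes = [obj for obj in scene if obj in DISHES]
        if dishes:
            return Decision(
                "I'll put the dishes in the drying rack.",
                "clear_table",
                ", ".join(dishes),
            )
        return Decision("The table is already clear.", "idle", "")
    return Decision("Sorry, I'm not sure how to help with that.", "idle", "")


# Library of "learned behaviors". Real ones would be motor policies;
# here each one only returns a description of what it would do.
BEHAVIORS: Dict[str, Callable[[str], str]] = {
    "hand_over": lambda target: f"handing {target} to the person",
    "clear_table": lambda target: f"moving {target} to the drying rack",
    "idle": lambda target: "waiting",
}


def speak(text: str) -> str:
    """Stand-in for text-to-speech: prints instead of producing audio."""
    print(f"[TTS] {text}")
    return text


def step(scene: List[str], audio: str) -> Tuple[str, str]:
    """One pass of hear -> decide -> act -> speak."""
    request = transcribe(audio)
    decision = decide(scene, request)
    action = BEHAVIORS[decision.behavior](decision.target)
    spoken = speak(decision.reply)
    return spoken, action


if __name__ == "__main__":
    table = ["apple", "plate", "cup", "drying rack"]
    for utterance in ["Can I have something to eat?", "Please clean up the table."]:
        reply, action = step(table, utterance)
        print(f"[ACT] {action}")
```

The sketch only shows the order in which the stages hand data to one another. Replacing the keyword rules with a real vision-language model, and the string-returning behaviors with learned control policies, is where the actual engineering effort would lie.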

The implications of this development are vast:

Revolutionizing Human-Robot Interaction: At home, robots could help with chores, provide companionship, and even offer guidance. In the workplace, they could work alongside people on complex tasks, taking over repetitive or hazardous activities while offering valuable insights and support.

Transforming Education and Research: AI-powered robots also hold real potential in education, where they could act as personalized tutors that tailor lessons to each student's needs. In research, they could become valuable partners, helping scientists run experiments, analyze data, and even formulate new hypotheses.

Exploration and Discovery: Robots equipped with reasoning capabilities could play a pivotal role in space exploration and deep-sea research. They could navigate challenging environments, gather data, and make autonomous decisions in real time, potentially accelerating scientific progress.

However, it's crucial to acknowledge the ethical considerations surrounding such advancements. As robots become more intelligent and interactive, robust safety measures and ethical guidelines need to be established.

The collaboration between Figure and OpenAI marks a significant turning point in robotics. Figure 01 is a substantial step toward robots that are not merely machines but capable partners, able to reason, interact, and collaborate with people in meaningful ways. The possibilities are wide open, and the journey toward robots that fit naturally into everyday life has truly begun.
